In graduate medical education (GME), excellence, compliance, and continuous improvement are not merely goals; they are foundational requirements. Every residency and fellowship program accredited by the Accreditation Council for Graduate Medical Education (ACGME) operates under its oversight, and the organization is dedicated to enhancing the quality of physician training and, by extension, patient care. Central to this mission is a rigorous, ongoing self-assessment process that culminates in what many program leaders know as the annual program evaluation. It's more than just a bureaucratic hurdle; it's a vital diagnostic tool for program health and a roadmap for future development.

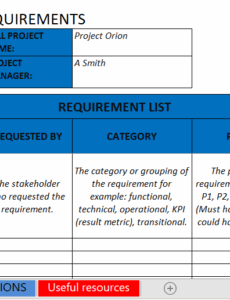

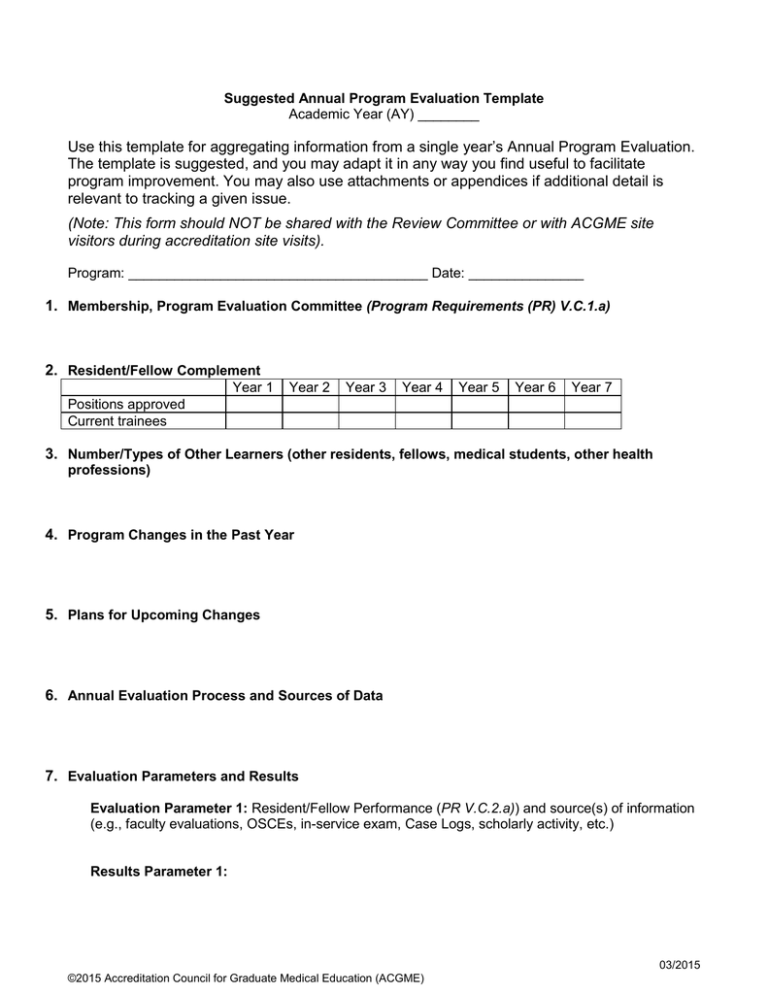

The annual program evaluation serves as the crucible where a program's performance, educational outcomes, and operational effectiveness are meticulously reviewed. It's an opportunity for program directors, faculty, and residents to collectively reflect on the past year, identify strengths, pinpoint areas for improvement, and strategize for the future. While the ACGME provides clear guidelines on what needs to be evaluated, how to evaluate it is left largely to each program, which often means adapting or creating a comprehensive framework. This is where the concept of an ACGME Annual Program Evaluation Template becomes invaluable, offering a structured approach to gathering, analyzing, and acting upon critical data that shapes the next generation of physicians.

The Cornerstone of Quality GME: Why Annual Evaluation Matters

The commitment to continuous improvement is etched into the very fabric of ACGME accreditation. For any GME program, the annual program evaluation isn’t just a requirement; it’s the engine that drives quality enhancement. This systematic review allows programs to critically assess their educational environment, curriculum, faculty effectiveness, and resident outcomes against established benchmarks and ACGME Program Requirements. Without this disciplined annual cycle, programs risk stagnation, failing to adapt to evolving medical knowledge, pedagogical best practices, or the changing needs of the healthcare system.

Moreover, a robust program evaluation process fosters a culture of accountability and transparency. It ensures that programs are not only meeting minimum accreditation standards but are actively striving for excellence in all facets of resident education and patient safety. This regular introspection helps identify educational gaps, resource deficiencies, and potential resident well-being concerns before they escalate. It’s about proactive management and strategic planning, rather than merely reactive problem-solving, ensuring that the learning environment remains optimal for both trainees and educators.

Navigating ACGME Requirements: What Every Program Director Needs to Know

ACGME Program Requirements stipulate that each residency and fellowship program must conduct an annual program evaluation. This evaluation must involve the program director, faculty, and resident representatives. The purpose is clear: to review the program’s aims, evaluate the extent to which these aims are being achieved, and identify opportunities for improvement. The results of this evaluation must be documented and subsequently used to develop a written action plan for the coming academic year. This plan, and the follow-up on its implementation, are critical aspects that the ACGME scrutinizes during site visits.

Program directors are at the helm of this crucial process. They are responsible for ensuring that all required components are addressed, that data is collected comprehensively, and that the evaluation leads to meaningful change. Common pitfalls often include superficial reviews, a lack of resident input, failure to develop specific and measurable action plans, or neglecting to track the implementation and effectiveness of those plans. Understanding the nuances of these requirements and integrating them into a systematic annual review process is paramount for maintaining accreditation and fostering a high-quality educational experience.

Key Components of a Robust Program Evaluation

An effective annual program evaluation should be comprehensive, data-driven, and forward-looking. While the ACGME does not prescribe a specific template, it does outline the essential areas that must be assessed. Developing a clear framework, akin to an ACGME Annual Program Evaluation Template, helps ensure every required area is covered. This typically involves reviewing the following aspects of the program and its impact.

- **Program Aims and Objectives:** Are the program’s stated aims clear and measurable? Are they being met?

- **Curriculum Review:** An assessment of the educational content, learning experiences, didactic sessions, and rotations. Is it up-to-date and comprehensive?

- **Faculty Performance:** Evaluation of teaching effectiveness, mentoring, scholarly activity, and compliance with ACGME faculty requirements.

- **Resident Performance and Outcomes:** Review of in-training examination scores, board pass rates, clinical competency assessments, scholarly work, and resident well-being metrics.

- **Educational Environment:** Assessment of the learning climate, institutional support, patient volume and diversity, and overall resident experience, including issues of harassment, mistreatment, and professionalism.

- **Resources:** Evaluation of physical resources, educational technology, library access, and support staff adequacy.

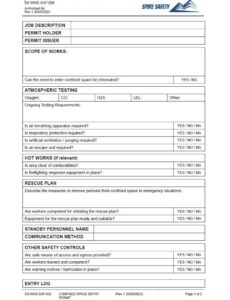

- **Patient Safety and Quality Improvement:** Review of resident participation in patient safety and quality improvement initiatives, and how these experiences integrate into the curriculum.

- **Action Plan Implementation:** A critical look at the effectiveness of action plans from previous evaluations. Were the identified improvements achieved?

Each of these components requires a systematic approach to data collection and analysis. This might involve surveys, focus groups, direct observation, performance data, and structured discussions among stakeholders.

From Data Collection to Action: Implementing Your Evaluation Findings

The true value of any annual program evaluation lies not just in identifying areas for improvement, but in translating those insights into tangible action. A pile of data, no matter how meticulously collected, is useless without a clear, executable action plan. Once the various components of the GME program have been assessed, the program leadership team, including faculty and resident representatives, must convene to discuss the findings. This collaborative discussion is vital for ensuring buy-in and generating practical solutions.

The resulting action plan should be specific, measurable, achievable, relevant, and time-bound (SMART). It should clearly delineate who is responsible for each task, what resources are needed, and by when the task is expected to be completed. For instance, if the evaluation reveals that residents are struggling with a particular aspect of patient management, the action plan might involve revising didactic sessions, creating new simulation modules, or enhancing mentorship opportunities in that specific area. Regular follow-up on the action plan throughout the year is essential to ensure progress and make necessary adjustments. This iterative process of evaluate-plan-act-monitor forms the backbone of continuous quality improvement.
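As a rough illustration of the fields described above, an action plan entry could be tracked as a small record with an owner, resources, a success measure, and a due date. This is only a sketch: the `ActionItem` class, its field names, the `overdue` helper, and the sample entries are all hypothetical and not part of any ACGME format.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ActionItem:
    """One entry in a written action plan (hypothetical field names)."""
    finding: str      # what the evaluation revealed
    action: str       # the specific change to make
    owner: str        # who is responsible for the task
    resources: str    # what is needed to carry it out
    measure: str      # how success will be judged
    due: date         # when the task should be completed
    done: bool = False


def overdue(items, today):
    """Return the open items whose due date has already passed."""
    return [item for item in items if not item.done and item.due < today]


# Illustrative entries only; owners, dates, and measures are made up.
plan = [
    ActionItem(
        finding="Residents struggle with one aspect of patient management",
        action="Revise the didactic sessions covering that topic",
        owner="Associate program director",
        resources="Two faculty half-days",
        measure="Improved scores on the related competency assessment",
        due=date(2025, 10, 1),
    ),
    ActionItem(
        finding="Residents struggle with one aspect of patient management",
        action="Create a new simulation module",
        owner="Simulation faculty lead",
        resources="Simulation lab time",
        measure="All residents complete the module",
        due=date(2026, 3, 1),
    ),
]

# A mid-year follow-up check: list anything that has slipped past its date.
for item in overdue(plan, today=date(2026, 1, 15)):
    print(f"OVERDUE: {item.action} (owner: {item.owner}, due {item.due})")
```

Running a check like this at each follow-up meeting keeps the "monitor" step of the cycle concrete. A shared spreadsheet with the same columns would serve the same purpose.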

Crafting Your Program Evaluation Tool: Practical Tips for Customization

While the core elements of a program’s annual review are mandated by the ACGME, the specific instrument or template used can vary widely. Many institutions develop their own comprehensive evaluation framework that integrates ACGME requirements with local institutional policies and priorities. For smaller programs or those just starting, adapting an existing annual program review template can be incredibly helpful. The key is to create a tool that is comprehensive yet user-friendly, ensuring all necessary data points are captured without overwhelming participants.

Here are some practical tips for developing or refining your program evaluation framework:

- **Start with the ACGME Program Requirements:** Go through your specific specialty's program requirements section by section, identifying every area that requires annual review or data collection. This forms the backbone of your evaluation instrument.

- **Incorporate Institutional Requirements:** Many Sponsoring Institutions (SIs) have their own GME policies and quality improvement initiatives that need to be integrated into the program's annual assessment.

- **Define Data Sources:** For each component, clearly identify where the data will come from (e.g., resident surveys, faculty evaluations, board pass rates, procedure logs, patient safety reports, alumni surveys).

- **Engage Stakeholders Early:** Involve faculty, residents, and relevant institutional staff in the development or review of your evaluation tool. Their input ensures relevance and promotes engagement in the process.

- **Utilize Technology:** Consider using online survey platforms or GME management systems to streamline data collection, aggregation, and reporting. This can significantly reduce administrative burden.

- **Focus on Usability:** Ensure the document or platform is easy to navigate, with clear instructions and logical flow. Avoid overly technical jargon where plain language suffices.

- **Prioritize Actionability:** Design the evaluation to directly inform an action plan. The questions asked and data collected should lead to actionable insights, not just descriptive statistics.

- **Review and Revise Annually:** The program evaluation process itself should be evaluated. Are there questions that are no longer relevant? Are there new areas that need to be assessed? Continuous refinement is key.
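For programs that keep their template in a spreadsheet or script, the "define data sources" tip can be turned into a quick completeness check. The sketch below is one possible approach, not a prescribed method: the component names come from the list earlier in this article, while the `DATA_SOURCES` mapping, the source assignments, and the `components_missing_sources` helper are illustrative assumptions.

```python
# Map each evaluation component to the data sources that will feed it.
# The assignments are examples only; two components are deliberately left
# empty to show what the check reports.
DATA_SOURCES = {
    "Program Aims and Objectives": ["alumni surveys"],
    "Curriculum Review": ["resident surveys"],
    "Faculty Performance": ["faculty evaluations"],
    "Resident Performance and Outcomes": ["board pass rates", "procedure logs"],
    "Educational Environment": ["resident surveys"],
    "Resources": [],
    "Patient Safety and Quality Improvement": ["patient safety reports"],
    "Action Plan Implementation": [],
}


def components_missing_sources(template):
    """Return the components that have no data source assigned yet."""
    return sorted(name for name, sources in template.items() if not sources)


for name in components_missing_sources(DATA_SOURCES):
    print(f"No data source defined for: {name}")
```

Any component the check flags is one the evaluation would otherwise have to assess without evidence, so it is worth resolving before data collection begins.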

Remember, the goal is not merely to complete an ACGME Annual Program Evaluation Template, but to genuinely understand your program's strengths and weaknesses, and to foster an environment of ongoing learning and improvement.

The Impact Beyond Compliance: Elevating Resident Experience and Patient Care

The annual program evaluation is far more than a checklist for accreditation. Its true impact resonates throughout the entire GME ecosystem, profoundly influencing resident well-being, faculty development, and ultimately, the quality of patient care delivered. By systematically evaluating the learning environment, programs can identify and address issues related to resident workload, professional development, and emotional support. This commitment to a healthy and supportive environment directly contributes to the well-being of residents, reducing burnout and fostering a more engaged and effective physician workforce.

Furthermore, a rigorous program assessment naturally ties into continuous quality improvement in patient care. When programs review their patient safety metrics, resident involvement in quality initiatives, and how their curriculum addresses population health needs, they are directly enhancing the preparedness of their trainees to be leaders in quality and safety. Residents who are trained in an environment of constant critical self-assessment and improvement are better equipped to carry these practices into their future careers, thereby elevating healthcare standards across the board. The annual program evaluation, therefore, stands as a testament to GME’s commitment to excellence, ensuring that the physicians of tomorrow are not just skilled, but also reflective, adaptable, and dedicated to the highest standards of care.

Carrying out a program's annual evaluation thoroughly and thoughtfully is a continuous journey, not a destination, and it demands dedication, collaboration, and a willingness to adapt. By embracing the spirit of the ACGME Annual Program Evaluation Template and using it as a dynamic tool for self-reflection and strategic planning, programs can not only meet accreditation standards but also significantly enhance the quality of education they provide, the well-being of their residents, and the safety of the patients they serve. This iterative process of evaluation and improvement ensures that graduate medical education remains at the forefront of healthcare innovation and quality.