In the complex world of data, where information flows ceaselessly through myriad systems, the process of Extract, Transform, Load (ETL) stands as a critical backbone for analytics, reporting, and operational intelligence. Yet, despite its foundational importance, the initial phase of defining *what* needs to be extracted, *how* it should be transformed, and *where* it should be loaded often presents the most significant hurdles. Without a clear roadmap, data projects can quickly derail, leading to costly reworks, missed deadlines, and ultimately, a distrust in the very data intended to provide clarity.

This is where a robust and thoughtfully designed ETL Requirements Gathering Template becomes an indispensable asset. It’s more than just a document; it’s a strategic tool that brings structure, clarity, and consistency to the intricate process of defining data integration needs. By standardizing how every detail is captured, from source systems to transformation logic and data quality rules, the template helps teams build data pipelines that are efficient and precisely aligned with business objectives. It also fosters better communication and reduces project risk for everyone involved, from data engineers to business stakeholders.

The Unseen Hero: Why a Robust Requirements Process Matters

Many data projects face significant challenges not because of technical limitations, but due to a fundamental lack of clarity in their initial requirements. Vague specifications lead to assumptions, which in turn lead to misunderstandings and solutions that don’t quite hit the mark. The ripple effect of poor requirements can be devastating, resulting in endless iterations, budget overruns, and a general loss of confidence in the data team’s ability to deliver.

A structured requirements process, guided by a well-defined framework, acts as the unsung hero in this scenario. It compels teams to ask the right questions at the right time, ensuring that every detail is considered and documented. This proactive approach helps to uncover potential issues early, before they become entrenched in the development cycle, thereby saving considerable time and resources in the long run. It transforms abstract business needs into concrete, actionable specifications that data professionals can build upon with confidence.

Furthermore, a comprehensive framework for defining ETL needs fosters a common understanding among all project participants. When business users, data architects, and developers share a single, detailed document outlining the extract, transform, load specifications, ambiguity is minimized. This shared understanding is vital for successful project execution and for ensuring that the final data solution truly addresses the organization’s strategic goals.

Benefits of a Structured Approach to ETL Requirements

Adopting a standardized approach to gather data integration requirements offers a multitude of advantages that extend beyond mere project efficiency. It lays a solid foundation for data governance and quality, ensuring that the data assets created are reliable and trustworthy.

One of the primary benefits is enhanced clarity and accuracy. By providing predefined sections and prompts, a template ensures that no critical piece of information is overlooked. This systematic capture of detail leads to more precise data mapping and transformation logic, reducing the likelihood of errors and discrepancies in the final data.

Another significant advantage is improved communication and collaboration. When all stakeholders contribute to and refer to a single source of truth, discussions become more focused and productive. This shared understanding minimizes misinterpretations and helps align expectations across diverse teams, from business analysts to technical developers.

Moreover, a standardized approach significantly reduces rework and development cycles. Identifying and documenting requirements thoroughly upfront means fewer surprises during development and testing. This proactive issue identification translates to faster project completion and more efficient use of resources, directly impacting the bottom line.

Finally, such a framework supports better decision-making and strategic alignment. By clearly articulating the business drivers behind each data requirement, the template helps ensure that the data pipeline is not just technically sound, but also strategically valuable, delivering the insights necessary for informed business decisions.

Key Components of an Effective ETL Requirements Gathering Template

To be truly effective, an ETL Requirements Gathering Template must be comprehensive, covering every facet of the data journey from source to destination. While specific sections may vary slightly based on project complexity and organizational standards, certain core elements are universally critical.

Here are the essential components typically found in a robust data integration requirements template:

- Project Overview and Scope: Clearly define the project’s objectives, its boundaries, and the key business problems it aims to solve. This section sets the context for all subsequent details and ensures everyone understands the “why.”

- Stakeholder Identification: List all individuals and groups who have an interest in or impact on the data project. This includes identifying their roles, responsibilities, and involvement in the requirements process, ensuring that all perspectives are considered.

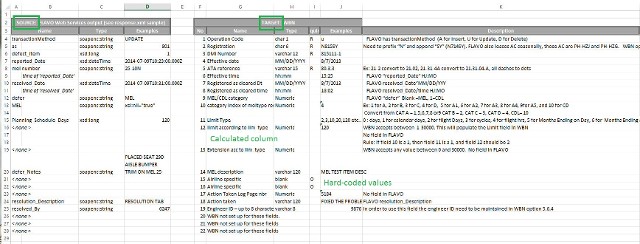

- Source System Details: Document comprehensive information about the data sources. This includes system names, database types, connection details, data volumes, update frequencies, data retention policies, and any known data quality issues or limitations.

- Target System Design: Specify the details of the destination system where the transformed data will reside. This involves defining the target database schema, table structures, data types, indexing strategies, and naming conventions.

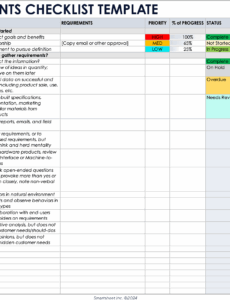

- Data Mapping Specifications: This is often the heart of the template. It provides a detailed, column-level mapping from source attributes to target attributes. Each mapping entry should specify the source column, target column, data type conversions, default values, and any relevant business rules or transformation logic.

- Transformation Logic: Explicitly describe all business rules, calculations, aggregations, data cleansing routines, and derivations required to transform the source data into the desired target format. This section should be detailed enough for a developer to implement without ambiguity.

- Data Quality Rules and Validation: Define specific rules for validating data integrity and quality. This includes checks for completeness, uniqueness, consistency, accuracy, and validity. Outline how errors should be handled, logged, and reported.

- Error Handling and Logging: Detail the procedures for detecting, logging, and reporting errors during the ETL process. Specify error thresholds, notification mechanisms, and rollback strategies to maintain data integrity.

- Performance Requirements: Document expectations regarding data latency, processing throughput, load window times, and scalability. This is crucial for designing an ETL solution that meets operational demands.

- Security and Compliance: Outline security protocols, data access restrictions, encryption requirements, and compliance mandates (e.g., GDPR, HIPAA, CCPA) that apply to the data throughout its lifecycle.

- Testing Strategy and Acceptance Criteria: Define how the ETL solution will be tested, including unit, integration, and user acceptance testing (UAT). Specify the success criteria that must be met for the solution to be approved and deployed.

- Deployment and Operational Requirements: Document the procedures for deploying the ETL solution, scheduling jobs, monitoring performance, and ongoing maintenance. This ensures a smooth transition from development to production.
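
As a minimal sketch of how one entry from the Data Mapping Specifications component might look in machine-readable form, the Python below models a column-level mapping and applies it to a single source row. All names here (`MappingEntry`, `cust_id`, `customer_key`, and so on) are hypothetical and chosen only for illustration; in practice the same fields often live in a spreadsheet or document.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class MappingEntry:
    """One row of a source-to-target mapping (hypothetical structure)."""
    source_column: str
    target_column: str
    convert: Callable[[Any], Any]      # data type conversion
    default: Optional[Any] = None      # used when the source value is missing
    rule: str = ""                     # business rule, in plain language


# Illustrative mapping; column names are assumptions, not from a real system.
MAPPING = [
    MappingEntry("cust_id", "customer_key", int, rule="Natural key, required"),
    MappingEntry("cust_name", "customer_name", lambda v: str(v).strip().title()),
    MappingEntry("country", "country_code", lambda v: str(v).upper(), default="UNKNOWN"),
]


def apply_mapping(row: dict, mapping: list) -> dict:
    """Build a target row by applying each mapping entry to a source row."""
    target = {}
    for entry in mapping:
        value = row.get(entry.source_column)
        if value is None or value == "":
            # Missing source value: fall back to the documented default.
            target[entry.target_column] = entry.default
        else:
            target[entry.target_column] = entry.convert(value)
    return target


source_row = {"cust_id": "42", "cust_name": "  ada lovelace ", "country": ""}
target_row = apply_mapping(source_row, MAPPING)
print(target_row)
```

Keeping the conversion, default value, and business rule on the same record mirrors the template's intent: a developer can see everything about one column in one place.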

Putting the Template into Action: A Step-by-Step Guide

Having excellent data pipeline design documentation is only half the battle; the real value comes from using it effectively. Implementing a requirements gathering template isn’t a one-off task but an iterative process that requires engagement and collaboration from all parties.

The first step is preparation and scope definition. Before even opening the template, clearly define the project’s objectives and scope. Assemble the core team, including business analysts, data architects, and domain experts. Ensure everyone understands the specific goals and boundaries of the data integration project.

Next comes requirements elicitation. This is where the bulk of the information is gathered. Conduct interviews with stakeholders, facilitate workshops, and review existing documentation using the template as your guide. The template’s structured sections prompt specific questions, ensuring a comprehensive capture of needs. For instance, when discussing source systems, use the template to systematically ask about data types, volumes, and access methods.

Following elicitation, the focus shifts to documentation and refinement. Populate the template with all the gathered information. Be precise and avoid ambiguity. For complex transformation logic, use examples or pseudo-code to clarify. This phase often involves multiple rounds of discussions to clarify details and resolve inconsistencies. The extract, transform, load specifications should be as clear as possible.
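
One way to follow the advice about examples and pseudo-code is to record a transformation rule together with a few worked input/output pairs that stakeholders have agreed on. The sketch below assumes a hypothetical rule, "net revenue equals gross amount minus discount, never below zero, rounded to two decimals"; the rule, function name, and figures are invented for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP


def net_revenue(gross: str, discount: str) -> Decimal:
    """Hypothetical rule: gross minus discount, floored at zero, 2 decimals."""
    value = Decimal(gross) - Decimal(discount)
    value = max(value, Decimal("0"))
    return value.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Worked examples as they might appear in the requirements document:
# (gross, discount, expected net revenue)
WORKED_EXAMPLES = [
    ("100.00", "15.50", "84.50"),
    ("20.00", "25.00", "0.00"),    # discount larger than gross: floor at zero
    ("10.005", "0", "10.01"),      # half-up rounding to two decimals
]

for gross, discount, expected in WORKED_EXAMPLES:
    assert net_revenue(gross, discount) == Decimal(expected)
```

Worked examples like these double as acceptance tests later, since each one pins down an edge case that prose alone tends to leave open.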

Once documented, the requirements need review and approval. Share the completed data project requirements with all relevant stakeholders. Conduct formal review sessions to walk through each section, addressing any questions or concerns. Obtain formal sign-off from key business and technical stakeholders to confirm that the document accurately reflects their needs and expectations.

Finally, understand that requirements are iterative. Even with a robust template, business needs and data landscapes can evolve. The template should be a living document, updated as new requirements emerge or existing ones change throughout the project lifecycle. Regularly revisit and revise the data warehousing requirements document to maintain its accuracy and relevance.

Common Pitfalls to Avoid

Even with a powerful ETL Requirements Gathering Template, there are common missteps that can undermine the entire process. Being aware of these pitfalls can help teams navigate the requirements phase more successfully.

One frequent mistake is vague or ambiguous requirements. Phrases like "data should be fast" or "data needs to be accurate" are unhelpful without quantifiable metrics. Ensure every requirement is specific, measurable, achievable, relevant, and time-bound (SMART). The template should guide toward this level of detail.
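
To illustrate the difference, a phrase like "data should be fast" could be restated as a measurable target and checked against observed run times. The following is only a sketch: the `LatencyRequirement` structure, the 60-minute target, the 95% threshold, and the sample timings are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyRequirement:
    """Hypothetical measurable form of 'data should be fast'."""
    dataset: str
    max_minutes_after_source_close: int   # freshness target
    percent_of_runs: float                # share of runs that must meet it


def is_met(req: LatencyRequirement, observed_minutes: list) -> bool:
    """Return True if enough observed runs finished within the target."""
    if not observed_minutes:
        return False
    on_time = sum(
        1 for m in observed_minutes if m <= req.max_minutes_after_source_close
    )
    return 100.0 * on_time / len(observed_minutes) >= req.percent_of_runs


req = LatencyRequirement(
    "daily_sales", max_minutes_after_source_close=60, percent_of_runs=95.0
)
runs = [42, 55, 48, 61, 50, 39, 58, 44, 47, 52]   # invented sample timings
print(is_met(req, runs))
```

Once a requirement is written this way, "fast enough" stops being a matter of opinion: either the observed runs satisfy the stated threshold or they do not.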

Another pitfall is skipping crucial details in the source-to-target mapping. It’s tempting to assume certain transformations or data types, but these assumptions can lead to significant issues downstream. Every single column mapping, data type conversion, and transformation rule must be explicitly documented.
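
A simple completeness check can catch such gaps before development starts. The sketch below assumes the mapping has been exported as a list of dictionaries with hypothetical field names (`source_column`, `target_column`, `target_type`, `rule`); it reports target columns that have no mapping at all and mapping rows with blank fields.

```python
def find_mapping_gaps(target_columns, mapping_rows):
    """Return (unmapped target columns, mapping rows with missing fields)."""
    required_fields = ("source_column", "target_column", "target_type", "rule")
    mapped = {row.get("target_column") for row in mapping_rows}
    unmapped = sorted(set(target_columns) - mapped)
    incomplete = [
        row.get("target_column", "<unknown>")
        for row in mapping_rows
        if any(not row.get(field) for field in required_fields)
    ]
    return unmapped, incomplete


# Illustrative data; column names are assumptions, not from a real system.
target_columns = ["customer_key", "customer_name", "signup_date"]
mapping_rows = [
    {"source_column": "cust_id", "target_column": "customer_key",
     "target_type": "INTEGER", "rule": "Cast to integer"},
    {"source_column": "cust_name", "target_column": "customer_name",
     "target_type": "", "rule": "Trim whitespace"},   # type left blank
]

unmapped, incomplete = find_mapping_gaps(target_columns, mapping_rows)
print("Unmapped target columns:", unmapped)
print("Incomplete mapping rows:", incomplete)
```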

Inadequate stakeholder involvement is also a major risk. If key business users or technical experts are not actively engaged in the requirements gathering process, critical insights or constraints may be missed. The template’s stakeholder section serves as a reminder to involve everyone necessary.

Ignoring data quality and error handling upfront can lead to compromised data integrity. Many teams focus solely on the happy path, neglecting to define how to handle malformed data, missing values, or system failures. Robust rules for validation and error management are non-negotiable for reliable data.
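
As a rough sketch of what defining the unhappy path can mean in practice, the code below validates rows against two invented rules, routes failures to a reject list with their reasons, and aborts the load when the reject ratio exceeds an agreed threshold. The field names (`order_id`, `amount`), the rules, and the 50% threshold are assumptions for illustration only.

```python
def validate_row(row: dict) -> list:
    """Return a list of rule violations for one row (empty means valid)."""
    errors = []
    if not row.get("order_id"):
        errors.append("order_id is required")
    try:
        if float(row.get("amount", "")) < 0:
            errors.append("amount must be non-negative")
    except (TypeError, ValueError):
        errors.append("amount must be numeric")
    return errors


def split_valid_and_rejected(rows: list, max_reject_ratio: float):
    """Route bad rows to a reject list; fail the load past the threshold."""
    valid, rejected = [], []
    for row in rows:
        errors = validate_row(row)
        if errors:
            rejected.append({"row": row, "errors": errors})
        else:
            valid.append(row)
    if rows and len(rejected) / len(rows) > max_reject_ratio:
        raise RuntimeError(
            f"{len(rejected)} of {len(rows)} rows rejected; aborting load"
        )
    return valid, rejected


rows = [
    {"order_id": "A1", "amount": "19.99"},
    {"order_id": "", "amount": "5.00"},      # missing key
    {"order_id": "A3", "amount": "abc"},     # malformed number
    {"order_id": "A4", "amount": "7"},
]
valid, rejected = split_valid_and_rejected(rows, max_reject_ratio=0.5)
for item in rejected:
    print(item["row"], item["errors"])
```

The threshold is the kind of detail worth settling in the requirements document: it decides whether a few bad rows are logged and skipped or whether the whole load stops.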

Finally, treating requirements as a one-time activity instead of an ongoing process can be detrimental. Data environments are dynamic; new systems emerge, business rules change. The data flow documentation should be regularly revisited and updated to reflect these evolutions, ensuring the ETL pipelines remain effective and relevant.

Frequently Asked Questions

What is ETL and why are its requirements complex?

ETL stands for Extract, Transform, Load, a process used in data warehousing to move data from various sources into a target system. Its requirements are complex due to the diversity of data sources (different formats, systems), the intricacy of business rules for data transformation (cleaning, aggregating, standardizing), and the need to ensure data quality, performance, and security throughout the entire process. Each step, from identifying the right data to defining how it should be manipulated, involves multiple stakeholders and detailed technical specifications.

Who should be involved in defining ETL needs?

A broad range of stakeholders should be involved to ensure comprehensive and accurate requirements. This typically includes business users or domain experts who understand the data’s meaning and purpose, business analysts who bridge the gap between business and technical needs, data architects who design the overall data landscape, data engineers or developers who will build the ETL pipelines, and IT operations teams who manage the infrastructure. Legal and compliance teams may also be necessary for sensitive data.

How does a template improve the process?

An ETL requirements gathering template improves the process by providing a standardized, structured framework for capturing information. It ensures that all critical aspects—from source system details to transformation logic and error handling—are systematically considered and documented. This standardization leads to greater clarity, reduces ambiguity, minimizes the chances of overlooked requirements, fosters better communication among teams, and ultimately leads to more robust, reliable, and cost-effective data solutions.

Can this template be adapted for cloud-based data integration?

Absolutely. While the core principles of ETL remain consistent, an ETL requirements gathering template is highly adaptable for cloud-based data integration projects. You might include specific sections for cloud service providers (AWS, Azure, GCP), serverless functions, API-based integrations, or managed data pipeline services. The fundamental components like source/target details, data mapping, and transformation logic are universal, but the method of implementation and specific technical considerations would be tailored to the cloud environment.

What’s the biggest mistake people make during requirements gathering?

The biggest mistake is often a lack of sufficient detail or specificity, leading to vague requirements. Teams might rush through the requirements phase, assuming that technical teams will “figure it out.” This leads to misinterpretations, extensive rework, and solutions that do not meet business needs. A robust template actively guides users to provide granular, explicit details for every aspect of the data flow, preventing these costly assumptions.

The journey of data from raw input to actionable insight is fraught with potential missteps, but the adoption of a formal ETL Requirements Gathering Template transforms this challenging journey into a well-lit path. It’s not merely about documenting; it’s about strategizing, communicating, and building a shared vision for data success. By investing time and effort into this foundational phase, organizations can unlock the true power of their data, transforming it from a mere collection of facts into a strategic asset that drives informed decisions and fosters innovation.

Embracing such a structured approach to defining your data integration requirements is an investment that pays dividends far beyond the immediate project completion. It cultivates a culture of precision, accountability, and clarity, ensuring that every byte of data serves a purpose and contributes meaningfully to your organization’s goals. Start leveraging the power of a comprehensive template today, and lay the groundwork for a more efficient, accurate, and ultimately more successful data future.