In today’s hyper-connected digital landscape, application performance isn’t just a technical metric; it’s a critical driver of user satisfaction, business revenue, and brand reputation. Slow loading times, unresponsive interfaces, or system crashes under peak demand can quickly erode user trust and send customers flocking to competitors. The stakes are incredibly high, making the proactive identification and mitigation of performance bottlenecks an absolute necessity for any organization deploying software.

Achieving robust, scalable, and responsive applications requires more than just functional correctness; it demands a deep understanding of how systems behave under various loads. This is where a well-defined and meticulously planned approach to performance testing becomes indispensable. Rather than scrambling to define test parameters on the fly, you can let a structured document guide your efforts. This article will explore the immense value of establishing a clear Load Testing Requirements Template, a foundational asset for anyone involved in developing, testing, or managing software projects.

Why a Structured Approach to Performance Testing Matters

The digital economy operates at an unforgiving pace, and users expect seamless experiences regardless of the time of day or the number of concurrent users. Without a deliberate strategy for performance validation, organizations risk costly outages, missed service level agreements (SLAs), and ultimately, a significant blow to their bottom line. Performance issues, if discovered late in the development cycle, can be far more expensive and time-consuming to fix than those caught early, often leading to project delays and resource overruns.

A structured approach to performance testing, guided by precise requirements, transforms a reactive firefighting exercise into a proactive risk mitigation strategy. It enables teams to define performance expectations from the outset, align stakeholders on critical metrics, and design tests that accurately simulate real-world usage patterns. This foresight empowers development teams to build performance into the architecture from day one and allows quality assurance professionals to conduct thorough, relevant, and repeatable load tests that uncover weaknesses before they impact end-users. It shifts the paradigm from "will it break?" to "how well will it perform under pressure?"

Key Benefits of Using a Load Testing Requirements Template

Adopting a standardized document for your performance testing needs offers a multitude of advantages that extend beyond mere test execution. It acts as a single source of truth, fostering clarity and consistency across all projects and teams.

- Consistency and Standardization: Ensures that every project adheres to a uniform set of guidelines for defining, executing, and reporting on load tests. This promotes best practices and reduces variations in testing quality.

- Comprehensive Coverage: A well-designed template prompts teams to consider all aspects of performance, from critical business transactions and expected user loads to system architecture and monitoring strategies. It helps prevent overlooking crucial non-functional requirements.

- Improved Stakeholder Alignment: Provides a clear framework for gathering input from business analysts, product owners, developers, and operations teams. This shared understanding of performance goals and test objectives minimizes misunderstandings and ensures everyone is working towards the same target.

- Reduced Rework and Delays: By capturing detailed performance requirements upfront, the template helps identify potential performance bottlenecks during the design and development phases, rather than discovering them right before launch. This early detection saves significant time and resources.

- Better Resource Allocation: With precise information on test scope, environment needs, and expected workload, teams can more effectively plan for and allocate testing resources, tools, and infrastructure.

- Clear Success and Failure Criteria: The template prompts teams to define specific Key Performance Indicators (KPIs) and pass/fail thresholds, making it unambiguous whether an application has met its performance targets or requires further optimization. This objective measurement is crucial for decision-making.

Core Components of an Effective Performance Test Plan

A robust **Load Testing Requirements Template** isn’t just a checklist; it’s a comprehensive blueprint for your performance validation efforts. While specific sections may vary slightly based on organizational needs, here are the fundamental elements that every effective performance test plan should encompass:

- Project Overview and Objectives: Define the application or system under test, its purpose, and the specific business goals that performance testing aims to support. What are the key performance questions you need to answer?

- Scope of Performance Testing: Clearly delineate what components, features, and user journeys will be included in the load tests, and just as importantly, what will be explicitly excluded.

- Application Under Test (AUT) Details: Provide an architectural overview, technology stack, third-party integrations, and any known dependencies that might influence performance. Include environment specifics like server configurations, database details, and network topology.

- Non-Functional Requirements (NFRs): This is the heart of your performance test requirements. Quantify expectations for:

  - Response Times: For critical transactions (e.g., login, search, checkout).

  - Throughput: Transactions per second or requests per second the system must handle.

  - Concurrency: The number of simultaneous users the system should support.

  - Resource Utilization: Acceptable CPU, memory, disk I/O, and network usage.

  - Scalability: How the system should behave when user load increases.

  - Stability/Reliability: How the system performs over extended periods under sustained load.

- Test Scenarios and User Journeys: Detail the specific actions users will perform, mirroring real-world usage patterns. Prioritize critical business flows and outline the steps for each scenario.

- Workload Model: Describe how the virtual users will interact with the system. This includes:

  - User Distribution: Percentage of users executing each scenario.

  - Peak Load: The maximum number of concurrent users to simulate.

  - Ramp-up/Ramp-down: How quickly users are added or removed from the test.

  - Think Times: Delays between user actions to simulate human behavior.

  - Pacing: The rate at which transactions are executed.

- Test Data Requirements: Specify the type, volume, and variety of data needed for testing. Address data privacy, generation, and cleanup strategies.

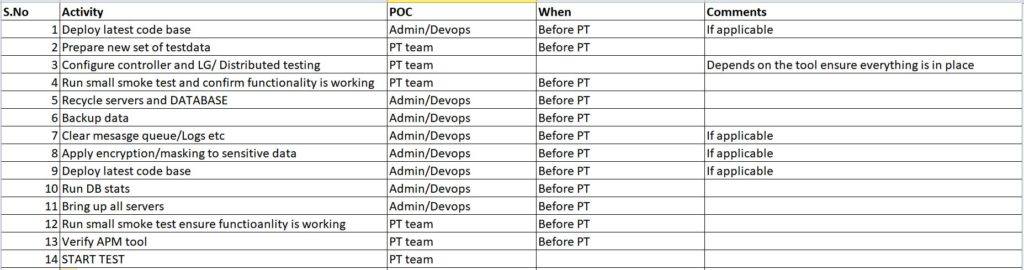

- Test Environment Setup: Detail the exact configuration of the test environment, ensuring it mirrors production as closely as possible. Include monitoring tools and reporting infrastructure.

- Success and Failure Criteria: Reiterate the quantitative KPIs from the NFRs, defining clear pass/fail thresholds for the performance test execution.

- Risks and Assumptions: Document any potential risks to the performance testing effort (e.g., environment availability, data constraints) and the assumptions made during planning.

- Reporting and Analysis: Outline the required format and content of test reports, including key metrics, performance graphs, and conclusions. Define how results will be analyzed and shared.
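To make the NFR and success/failure sections concrete, here is a minimal sketch of how one quantified requirement could be recorded and checked against a test run. The class, field names, thresholds, and sample data are all hypothetical illustrations rather than part of any specific tool, and the percentile uses the simple nearest-rank method, which is one of several common definitions.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TransactionRequirement:
    """One quantified NFR row from the template (field names are illustrative)."""
    name: str
    max_p95_response_ms: float
    min_throughput_tps: float
    max_error_rate: float  # fraction of all requests, e.g. 0.01 means 1%


def percentile_nearest_rank(samples, pct):
    """Return the pct-th percentile using the nearest-rank method.

    Assumes at least one sample is present.
    """
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate(req, ok_response_ms, error_count, duration_s):
    """Compare one test run against a requirement; return names of failed criteria."""
    total = len(ok_response_ms) + error_count
    failed = []
    if percentile_nearest_rank(ok_response_ms, 95) > req.max_p95_response_ms:
        failed.append("p95_response_ms")
    if len(ok_response_ms) / duration_s < req.min_throughput_tps:
        failed.append("throughput_tps")
    if error_count / total > req.max_error_rate:
        failed.append("error_rate")
    return failed


# Hypothetical thresholds for a checkout transaction.
checkout = TransactionRequirement("checkout", max_p95_response_ms=90,
                                  min_throughput_tps=5, max_error_rate=0.01)

# Made-up run: 100 successful requests taking 1..100 ms, no errors, over 10 s.
print(evaluate(checkout, list(range(1, 101)), error_count=0, duration_s=10))
# prints ['p95_response_ms'] because the observed p95 is 95 ms
```

An empty result list means the run passed every criterion. Writing thresholds down in this form forces each requirement to be a number rather than a phrase like "fast enough".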

Crafting Your Performance Test Scenarios and Workload

Defining precise test scenarios and a realistic workload model is paramount for the success of your performance testing efforts. Test scenarios should be derived directly from real user behavior and critical business processes. Engage with product owners and business analysts to identify the most frequently used paths and the transactions that are most critical to your organization’s success. Document each step a user takes, including data inputs and expected outputs, ensuring these actions are as close to real-world interactions as possible.

The workload model, on the other hand, translates these scenarios into a measurable test plan. This involves determining the number of concurrent users, the distribution of those users across various scenarios, and the pace at which transactions are executed. Consider peak usage times, average daily load, and potential future growth when defining these parameters. Incorporating "think times" (pauses between user actions) is crucial for realistic simulation: without them, each virtual user fires requests back-to-back and generates far more load than a real person would, creating artificial bottlenecks that would not occur in production. An accurate workload model helps ensure that your performance test plan truly reflects the demands your system will face in production.
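As a rough illustration of how these workload parameters relate, the sketch below uses Little's Law for a closed system with think time, N = X × (R + Z), to estimate how many virtual users are needed to reach a target throughput, and then splits them across scenarios. The function names, scenario names, and all figures are assumptions chosen for the example; percentages are expected to be whole numbers.

```python
import math


def required_virtual_users(target_tps, avg_response_s, think_time_s):
    """Little's Law: users N = throughput X * (response time R + think time Z)."""
    users = target_tps * (avg_response_s + think_time_s)
    # Round first so float noise such as 250.00000000000003 does not add a user.
    return math.ceil(round(users, 6))


def split_by_scenario(total_users, mix_percent):
    """Allocate users to scenarios by integer percentage (largest-remainder rounding)."""
    if sum(mix_percent.values()) != 100:
        raise ValueError("scenario percentages must sum to 100")
    parts = {name: divmod(total_users * pct, 100) for name, pct in mix_percent.items()}
    alloc = {name: quota for name, (quota, _) in parts.items()}
    leftover = total_users - sum(alloc.values())
    # Hand leftover users to the scenarios with the largest remainders.
    for name in sorted(parts, key=lambda n: parts[n][1], reverse=True)[:leftover]:
        alloc[name] += 1
    return alloc


# Hypothetical target: 50 transactions/s, 0.5 s responses, 4.5 s think time.
users = required_virtual_users(target_tps=50, avg_response_s=0.5, think_time_s=4.5)
print(users)  # 250
print(split_by_scenario(users, {"browse": 60, "search": 30, "checkout": 10}))
# {'browse': 150, 'search': 75, 'checkout': 25}

# With zero think time, only 25 users produce the same 50 transactions/s.
print(required_virtual_users(50, 0.5, 0))  # 25
```

The last line shows why think time matters: the same throughput can come from 25 users hammering the system or 250 users behaving like people, and the two cases stress sessions, connections, and caches very differently.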

Integrating Performance Testing into Your SDLC

Effective performance engineering isn’t a one-time event; it’s an ongoing discipline that should be woven into every stage of your Software Development Life Cycle (SDLC). By “shifting left,” organizations can identify and address performance issues much earlier, when they are less complex and costly to resolve. This begins with the very first stage, where the comprehensive definition of performance requirements using a standardized document plays a pivotal role.

During the requirements gathering phase, the structured approach provided by a template ensures non-functional requirements are captured alongside functional ones. In the design phase, architects can leverage these requirements to make informed decisions about system architecture and technology choices that support performance goals. Developers can then implement performance best practices and conduct localized unit performance tests. During the QA phase, comprehensive system-level load testing, guided by the agreed-upon performance test plan, validates the application’s readiness. Finally, in production, continuous monitoring ensures that real-world performance aligns with expectations and quickly identifies any regressions or new bottlenecks, allowing for continuous refinement of the performance test criteria for future iterations.

Tips for Maximizing Your Template’s Value

A well-designed template is a powerful tool, but its true value is unlocked through thoughtful application and continuous refinement. Here are some tips to ensure your performance test requirements document serves you optimally:

- Keep it Dynamic: Your template should be a living document, updated regularly to reflect changes in application architecture, business requirements, and performance expectations.

- Involve All Stakeholders: Performance is everyone’s responsibility. Ensure input from product owners, business analysts, developers, QA engineers, and operations teams is incorporated into the performance requirements.

- Start Simple, Iterate: Don’t try to perfect the template on day one. Start with the core components and gradually add more detail and sophistication as your team gains experience and identifies specific needs.

- Document Assumptions: Clearly state any assumptions made regarding user behavior, data volumes, or environmental stability. This helps in understanding test results and diagnosing discrepancies.

- Automate Where Possible: While defining requirements is a manual process, leverage automation for test execution, data generation, and reporting to enhance efficiency and repeatability.

- Review and Refine Regularly: Schedule periodic reviews of your performance testing requirements with the team to ensure they remain relevant, comprehensive, and actionable.

Frequently Asked Questions

What’s the difference between load testing and stress testing?

Load testing assesses system behavior under expected and peak user loads, verifying that the application meets defined performance criteria like response times and throughput. Stress testing, on the other hand, pushes the system beyond its normal operating capacity to determine its breaking point, identify failure modes, and evaluate how it recovers. Both are crucial for a complete understanding of system performance.
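One way to picture the difference is through the shape of the load each test applies. The sketch below expresses both as lists of (duration in seconds, target users) stages; the function names, the stage format, and the numbers are illustrative assumptions and do not correspond to any particular tool's configuration.

```python
def load_test_profile(peak_users, ramp_s, hold_s):
    """Ramp up to the expected peak, hold it steady, then ramp down."""
    return [(ramp_s, peak_users), (hold_s, peak_users), (ramp_s, 0)]


def stress_test_profile(peak_users, step_users, step_s, max_users):
    """Start at the expected peak and keep stepping beyond it.

    max_users is a safety cap; in practice the test usually ends earlier,
    at the step where the system starts failing.
    """
    stages = []
    users = peak_users
    while users <= max_users:
        stages.append((step_s, users))
        users += step_users
    return stages


print(load_test_profile(peak_users=500, ramp_s=300, hold_s=1800))
# [(300, 500), (1800, 500), (300, 0)]
print(stress_test_profile(peak_users=500, step_users=250, step_s=300, max_users=1500))
# [(300, 500), (300, 750), (300, 1000), (300, 1250), (300, 1500)]
```

The load profile never exceeds the expected peak, while the stress profile treats that peak as its starting point.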

Who should be involved in defining performance requirements?

Defining comprehensive performance testing requirements requires collaboration from multiple roles. Key contributors include product owners (for business context and critical user journeys), business analysts (for non-functional requirements and KPIs), solution architects and developers (for system architecture and technical constraints), and QA engineers (for testability and execution logistics). Operations teams also provide crucial insights into production environment constraints and monitoring capabilities.

How often should we update our performance test plan?

Your performance test plan should be updated whenever there are significant changes to the application’s architecture, major feature additions, changes in expected user loads or business requirements, or after a major incident that reveals previously unknown performance bottlenecks. It’s also good practice to review and refine it at the beginning of each major development cycle or release to ensure it remains current and relevant.

Can a single template fit all projects?

While a core template can serve as an excellent starting point, it’s often beneficial to customize it for specific project types or application architectures. For instance, a template for a complex microservices application might require more detailed sections on inter-service communication and API performance, whereas a mobile application might focus more on network conditions and device-specific performance metrics. The goal is adaptability, not rigidity.

What are some common pitfalls in performance requirement gathering?

Common pitfalls include defining vague or unquantifiable requirements (e.g., “the system should be fast”), failing to involve all necessary stakeholders, neglecting to consider peak load scenarios or future growth, and not adequately documenting the test environment or test data needs. Another frequent mistake is defining requirements too late in the development cycle, making it difficult and expensive to implement performance improvements.

Embracing a systematic approach to defining your performance testing requirements is not merely a bureaucratic exercise; it’s a strategic investment in the quality, stability, and success of your digital products. A well-crafted and consistently utilized performance test plan empowers teams to build confidence in their applications, ensuring they can withstand the rigors of real-world usage and deliver exceptional user experiences.

By establishing clear expectations, documenting critical parameters, and fostering cross-functional collaboration, you transform performance testing from a daunting challenge into a predictable and manageable process. This proactive stance significantly reduces the risk of post-launch performance failures, protecting your brand reputation and securing customer loyalty. Start building your comprehensive performance test plan today, and lay the groundwork for a robust and resilient application architecture that stands the test of time and traffic.