In the complex and often deeply personal world of mental healthcare, understanding whether our interventions are truly making a difference isn’t just good practice—it’s an ethical imperative. Programs designed to support mental wellness, from community outreach initiatives to school-based counseling, represent significant investments of time, resources, and hope. Yet, without a clear, systematic way to assess their effectiveness, these programs risk operating in a vacuum, unable to prove their worth, secure ongoing funding, or—most importantly—continually improve the lives they aim to serve.

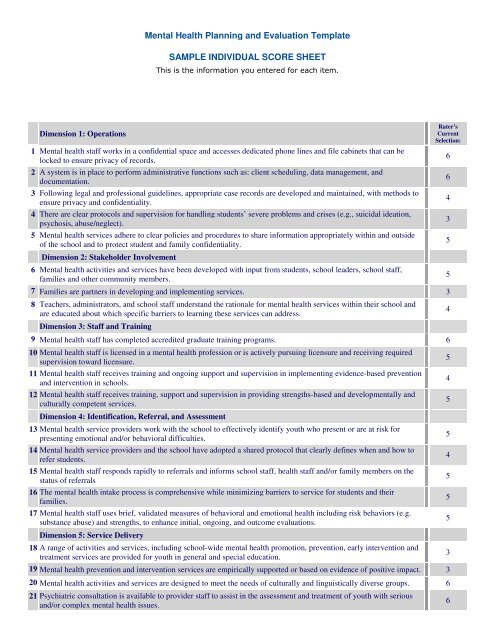

This is precisely where a well-crafted Mental Health Program Evaluation Template becomes an indispensable tool. It transforms the abstract idea of "assessment" into a concrete, actionable framework, providing a roadmap for organizations to systematically gather data, measure outcomes, and demonstrate impact. Far from being a rigid checklist, this template is a dynamic guide, designed to empower mental health professionals, administrators, and stakeholders to objectively evaluate their efforts and ensure they are truly meeting the needs of their communities.

Why Program Evaluation is Non-Negotiable in Mental Health

The landscape of mental health services is constantly evolving, driven by new research, changing societal needs, and shifts in funding priorities. In this dynamic environment, robust program evaluation is not merely a bureaucratic requirement; it’s a cornerstone of responsible and effective practice. For any organization dedicated to improving mental well-being, a commitment to rigorous assessment offers several distinct benefits.

First, evaluation provides accountability. Stakeholders, including funders, government agencies, and the public, expect evidence that resources are being used wisely and are achieving their intended outcomes. A strong evaluation framework allows programs to demonstrate their impact transparently, build trust, and justify continued investment.

Second, evaluation drives continuous improvement. By systematically collecting and analyzing data, organizations can identify what’s working, what’s not, and why. This feedback loop enables program leaders to refine interventions, close gaps in service, and adapt to emerging challenges, so that services remain relevant and effective.

Beyond accountability and improvement, evaluating mental health programs helps to build the evidence base for effective interventions. When programs rigorously document their methods and outcomes, they contribute to the broader body of knowledge, informing best practices and helping other organizations replicate successful models. Ultimately, effective program assessment ensures that mental health initiatives are not just well-intentioned, but also genuinely transformative, leading to better outcomes for individuals and stronger, healthier communities.

Decoding the Core Components of an Effective Evaluation

Developing a comprehensive framework for assessing mental health initiatives requires careful consideration of various elements. A robust program evaluation framework isn’t just about collecting data; it’s about collecting the *right* data in a meaningful way. While specific details will always be customized to the program, several core components form the backbone of any effective assessment approach. These elements ensure a holistic understanding of the program’s design, implementation, and impact.

Here are essential components to integrate into your program assessment framework:

- Program Description: A clear, concise overview of the program, including its mission, vision, and the specific problem it aims to address. This sets the context for the entire evaluation.

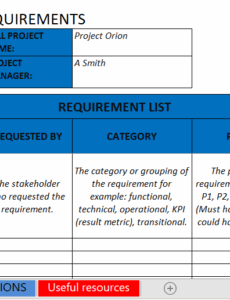

- Goals and Objectives: Precisely defined, measurable goals and objectives. These should be SMART (Specific, Measurable, Achievable, Relevant, Time-bound) and directly link to the desired changes in mental health outcomes.

- Target Population: A detailed description of the individuals or communities the program intends to serve, including demographics, prevalence of conditions, and specific needs.

- Intervention Design: A thorough explanation of the services, activities, and strategies employed by the program. This outlines *how* the program intends to achieve its objectives.

- Logic Model: A visual representation of how the program is supposed to work, linking inputs, activities, outputs, and short-term, intermediate, and long-term outcomes. It makes the program’s assumed causal pathways explicit, which shows the evaluation which links it needs to test.

- Evaluation Questions: Specific questions the evaluation aims to answer. These often fall into categories like process (e.g., Was the program implemented as planned?), outcome (e.g., Did participants’ symptoms decrease?), and impact (e.g., Did community mental health improve?).

- Data Collection Methods: The tools and strategies used to gather information, which may include surveys, interviews, focus groups, clinical assessments, existing administrative data, or observations. Specify both quantitative and qualitative methods.

- Key Performance Indicators (KPIs): Specific metrics chosen to measure progress toward objectives. For mental health, these could include symptom reduction scales, treatment adherence rates, participant satisfaction, or improvements in social functioning.

- Data Analysis Plan: How collected data will be analyzed to answer evaluation questions. This includes statistical methods for quantitative data and thematic analysis for qualitative data.

- Ethical Considerations: Procedures for ensuring participant privacy, informed consent, data security, and cultural sensitivity throughout the evaluation process. This is paramount in mental health research.

- Dissemination Plan: How evaluation findings will be communicated to stakeholders, including reports, presentations, and publications. This ensures the results are used to inform future decisions.

- Timeline and Resources: A realistic schedule for evaluation activities and an outline of the budget and personnel required.
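To make the logic model and KPI components more concrete, here is a minimal Python sketch. It assumes a program that tracks session attendance and uses a symptom scale on which lower scores mean fewer symptoms. The class names, fields, and sample values are hypothetical illustrations, not part of any standard template. Treatment adherence is computed here as the share of scheduled sessions that were attended, which is only one of several reasonable definitions.

```python
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical template fields; rename them to match your own framework.
@dataclass
class LogicModel:
    inputs: list[str]
    activities: list[str]
    outputs: list[str]
    # Outcome tiers keyed by name, e.g. "short_term", "intermediate", "long_term".
    outcomes: dict[str, list[str]] = field(default_factory=dict)

@dataclass
class ParticipantRecord:
    sessions_scheduled: int
    sessions_attended: int
    baseline_score: float  # symptom scale at intake (assumed: lower = fewer symptoms)
    followup_score: float  # same scale at follow-up

def treatment_adherence_rate(records: list[ParticipantRecord]) -> float:
    """Share of scheduled sessions that were attended, pooled across participants."""
    scheduled = sum(r.sessions_scheduled for r in records)
    attended = sum(r.sessions_attended for r in records)
    return attended / scheduled if scheduled else 0.0

def mean_symptom_reduction(records: list[ParticipantRecord]) -> float:
    """Average drop in symptom score from intake to follow-up (positive = improvement)."""
    return mean(r.baseline_score - r.followup_score for r in records)

# Illustrative values only.
model = LogicModel(
    inputs=["two licensed counselors", "grant funding"],
    activities=["weekly group sessions"],
    outputs=["sessions delivered", "participants enrolled"],
    outcomes={"short_term": ["reduced symptom scores"],
              "long_term": ["improved social functioning"]},
)
records = [ParticipantRecord(10, 8, 15.0, 9.0), ParticipantRecord(10, 6, 12.0, 10.0)]
print(treatment_adherence_rate(records))  # 0.7
print(mean_symptom_reduction(records))    # 4.0
```

Pooling sessions across participants gives more weight to people with more scheduled sessions. Averaging each participant’s own adherence rate is an equally defensible alternative, so the template should state which definition it uses.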

Tailoring Your Template: Customization and Adaptability

While a structured assessment framework provides an invaluable foundation, its true power lies in its adaptability. No two mental health programs are identical; they operate in different contexts, serve diverse populations, and address unique challenges. Therefore, any effective mental health program evaluation template must be flexible enough to be tailored to specific program needs, rather than imposed as a rigid, one-size-fits-all solution.

The process of customizing your program assessment tools begins with a deep understanding of the program itself. Consider its scope—is it a small, local support group, or a large-scale, multi-site telehealth initiative? The evaluation questions and data collection methods for a school-based resilience program will differ significantly from those for an intensive outpatient therapy service for adults. Involve key stakeholders—program staff, participants, community partners, and funders—in the customization process. Their insights are crucial for identifying relevant outcomes, feasible data collection strategies, and culturally appropriate measures.

Furthermore, recognize that the evaluation framework can evolve. As a program matures, or as new data emerges, the evaluation questions or metrics may need adjustment. For instance, an initial evaluation might focus on process measures (e.g., attendance, satisfaction), while later evaluations might delve deeper into long-term impact and sustainability. Embracing this iterative approach ensures that your program assessment remains relevant and maximizes its utility, ultimately serving as a living document that guides continuous improvement rather than a static report.

Putting It Into Practice: A Step-by-Step Approach

Transitioning from theory to practical application is where the real work begins. Utilizing a structured approach for mental health program evaluation requires careful planning and execution. It’s about more than just filling in blanks; it’s about embedding evaluation into the very fabric of your program’s operations. This systematic process ensures that your assessment efforts yield meaningful insights and lead to tangible improvements.

- Define Your "Why": Before anything else, clarify the primary purpose of your evaluation. Is it for funding renewal, program improvement, demonstrating impact to the community, or building the evidence base? Your "why" will shape your evaluation questions and methodology.

- Engage Stakeholders Early: Bring together program staff, administrators, participants (where appropriate), and funders. Their perspectives are vital in defining relevant outcomes, identifying challenges, and ensuring the evaluation is perceived as helpful, not just burdensome.

- Refine Program Logic and Goals: Revisit your program’s logic model and ensure its goals and objectives are clear, measurable, and directly linked to desired mental health outcomes. This ensures you’re measuring what truly matters.

- Select Appropriate Measures and Tools: Choose data collection instruments (surveys, interviews, clinical scales) that are valid, reliable, culturally sensitive, and feasible to administer. Consider a mix of quantitative (e.g., symptom scores, attendance) and qualitative (e.g., participant narratives, focus group feedback) data.

- Develop a Data Collection Plan: Outline who will collect what data, how it will be stored, and when. Ensure staff are properly trained, and privacy protocols are strictly adhered to, especially when dealing with sensitive mental health information.

- Analyze and Interpret Data: Once data is collected, employ appropriate analytical methods. Look for patterns, trends, and discrepancies. Don’t just present numbers; interpret what they mean in the context of your program’s goals and the broader mental health landscape.

- Report Findings Transparently: Present your results clearly and concisely to all relevant stakeholders. Highlight successes, identify areas for improvement, and offer actionable recommendations. Use accessible language and visuals to convey complex information.

- Utilize Findings for Action: The ultimate goal of any program evaluation for mental health initiatives is to inform decisions. Use the insights gained to make data-driven adjustments to your program, advocate for resources, or replicate successful strategies. This completes the continuous improvement cycle.
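The "Analyze and Interpret Data" step often begins with a simple within-participant comparison. Below is a minimal sketch of a paired pre/post analysis in Python. It assumes each participant completed the same symptom scale at intake and at follow-up, with lower scores meaning fewer symptoms. The function name and return fields are hypothetical. For a p-value, a library routine such as SciPy’s `scipy.stats.ttest_rel` computes the same paired t statistic, though its sign depends on argument order.

```python
import math
from statistics import mean, stdev

def paired_change_summary(pre: list[float], post: list[float]) -> dict:
    """Summarize within-participant change on a symptom scale.

    Assumes pre[i] and post[i] belong to the same participant and that
    lower scores mean fewer symptoms, so a negative change is improvement.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two matched pre/post pairs")
    diffs = [b - a for a, b in zip(pre, post)]  # follow-up minus intake
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)  # sample standard deviation of the changes
    if sd_diff == 0:
        raise ValueError("all changes are identical; t is undefined")
    t_stat = mean_diff / (sd_diff / math.sqrt(n))  # paired t statistic, df = n - 1
    d_z = mean_diff / sd_diff  # standardized mean change (Cohen's d_z)
    return {"n": n, "mean_change": mean_diff, "sd_change": sd_diff,
            "t": t_stat, "d_z": d_z}

summary = paired_change_summary([15.0, 12.0, 18.0, 10.0], [9.0, 10.0, 12.0, 10.0])
print(summary["mean_change"], summary["sd_change"])  # -3.5 3.0
```

A pre/post change alone does not show that the program caused the improvement. Without a comparison group, regression to the mean or outside factors could explain part of it, so the evaluation design determines how strongly such a result can be interpreted.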

Maximizing Impact: Beyond the Checklist

An effective Mental Health Program Evaluation Template is more than just a set of fields to complete; it’s a strategic framework for cultivating excellence and ensuring genuine impact. While the structured approach provides clarity and consistency, the true value emerges when organizations look beyond the mere checklist and embrace evaluation as an ongoing, iterative process deeply integrated into their mission. This perspective allows for continuous learning and adaptation, which is vital in the ever-evolving field of mental healthcare.

By meticulously tracking outcomes, collecting participant feedback, and analyzing how well interventions work in practice, programs can move from anecdotal success stories to evidence-based claims of impact. This rigor not only strengthens funding applications and demonstrates accountability to funders but, more importantly, fosters an environment of constant improvement that directly benefits the individuals receiving care. It allows mental health professionals to understand nuances in their services, adapt to diverse client needs, and develop new approaches based on solid data rather than assumptions.

Ultimately, a well-utilized evaluation framework for program assessment transforms data into insights, and insights into action. It empowers organizations to refine their services, articulate their value proposition with confidence, and make informed decisions that lead to better mental health outcomes for communities. By committing to systematic program evaluation, we ensure that our efforts are not just well-intended, but truly effective, creating lasting positive change in the lives of those navigating mental health challenges.